FlipSide

Addressing Misinformation on Short-Form Video Platforms With a New Interaction Pattern

Problem

Short-form video platforms are popular among young adults, but their design, combined with AI tools, may amplify the spread of misinformation.

Response

Prompt skepticism in the moment by highlighting when a creator makes a claim, presenting responses to the claim, and revealing the creator's idea network.

My Role

User Research

Co-designed the research plan. Derived insights from the research data using thematic analysis. Facilitated many of our interviews.

Ideation & Convergence

Led ideation sprints.

Led down-selection workshops grounded in research findings and relevant frameworks.

Explanation & Storytelling

Created high-quality visuals to pitch ideas.

Interactive Prototyping

Created rapid prototypes to test specific interactions, and built a functioning prototype of the end-to-end user flow.

Design System

Developed and documented our design system.

Video Editing

Planned, shot, and edited all of our explanatory videos.

Project Brief

Context

My team worked with an advisor from Google News.

We were given an open-ended brief to design for the near future of the "information ecosystem."

Short-form video + young adults

Problem Definition

Young Adults Are Particularly Vulnerable to Misinformation on Short-Form Video Platforms

We were also interested in how the recent proliferation of generative AI tools will impact these platforms in the next few years.

Rather than exploring the entire universe of information, we narrowed our research to health and wellness misinformation. This type of information is important to get right, and there is a great deal of misinformation in this space.

Objectives:

Understand how young adults choose to trust information online

Identify opportunities to reduce trust in misinformation

Develop a response to help young adults recognize misinformation

Research Planning

Research Question

How do young adults build information trust* with health & wellness information on short-form video platforms that host significant AI-generated content?

Secondary Research—Selected Sources

Practicing Information Sensibility: How Gen Z Engages with Online Information

by Amelia Hassoun et al.

You Think You Want Media Literacy… Do You?

by danah boyd

Encounters with Visual Misinformation and Labels Across Platforms

by Emily Saltz et al.

Research Process

Literature Review

SME Interviews

Remote Mobile Ethnography

Semi-Structured Interviews

Contextual Inquiry

Creating a Research Plan

I co-created and edited our research plan. We set out to understand how young adults build information trust online today, and what changes we might expect with the presence of significant generative AI content.

Generative Research

Recruitment

We recruited young adults aged 18–24. We chose not to interview minors, because research with minors requires a greater level of care than our compressed timeline allowed. We pre-screened for usage of short-form video apps, familiarity with health and wellness content, and other factors.

I designed a call for volunteers that we posted on Instagram, Reddit, Discord, and other social media sites.

Methods

Remote Mobile Ethnography

Prior to the interview, participants shared 3–5 short videos or creators that influenced their understanding of health and wellness.

Their responses to this pre-interview questionnaire let us tailor the semi-structured interview questions in our second stage.

Semi-Structured Interview

We discussed the videos participants had shared. We sought to understand how participants evaluated credibility and their methods for verifying information in the context of these videos.

We also presented participants with hypothetical future AI scenarios to gauge their feelings about generative AI.

Contextual Inquiry

We observed participants as they navigated their preferred short-form video platform while screen sharing, and asked them to "think aloud" as they evaluated the trustworthiness of videos in real time. This let us identify factors influencing their trust that they might not have articulated when describing their own process.

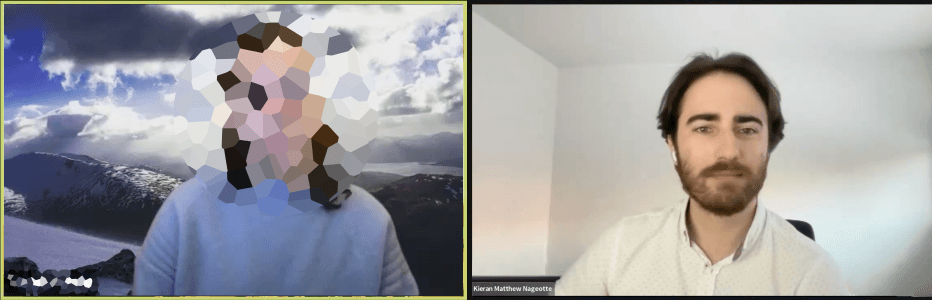

Conducting Interviews

I facilitated many of our interviews, drawing on interviewing experience from my 5 years designing physical products.

Analyzing the Data

Axial Coding & Thematic Analysis

To analyze our data thoroughly and produce valid results, I wanted us to perform a thematic analysis. This rigorous method let us examine the complex, multi-layered perceptions, beliefs, and attitudes surrounding trust in short-form video. We started by independently open coding two interviews, which produced about 150 inductive codes. We coded “blind” so that we would not influence one another during this initial pass, and so that we would surface as much novel insight as possible.

We then moved to axial coding, grouping our initial codes into 26 broader categories. Refining these categories took multiple coding rounds, both individually and as a team. We chose themes that recurred across the data, not ones that simply confirmed our pre-existing expectations. Once the codebook was finished, at least two team members coded each interview to increase the reliability of our analysis.

Discoveries

Opinions not facts...?

Young adults see short-form videos as reflective of individual opinions and experiences rather than objective facts.

But is that actually the case?

“I wouldn't say that it is or is not trustworthy. I feel like a lot of this stuff is like people talking about their own experiences... it's kind of like the nature of TikTok as a medium.”

– P2, on learning new “insider” information on Instagram Reels

Support + affirmation from strangers like me

Secret knowledge, as a motivation & trust builder

Young adults’ sense of belonging to a distinct community or in-group is enhanced by creators who share exclusive insights, apparent “hacks,” or taboo and fringe topics.

“Whether it's like beauty, workout, jobs, it's kind of nice when people don't gatekeep the information that they have and you're like being let into a little secret club.”

– P1, on learning new “insider” information on Instagram Reels

“Social” media is a misnomer

Participants browse social media passively, reading comments to validate content, but often refraining from commenting, messaging, or sharing.

“I just go on the comments to see if someone's agreeing with me. I like it real quick, but I don't comment. I've never commented, ever.”

– P7, on how they engage with content on TikTok

Pure motivations vs. factory-produced AI

Young adults perceive AI-generated content as profit-motivated and mass-produced, lowering trust in this content because the creators' motivations are suspect.

“It's the most authentic platform. It's the least produced, in the moment content. So seeing something that feels like it belongs on TikTok, but actually being AI generated just feels very wrong.”

– P5, on why they find TikTok valuable

Personal experiences vs. AI

Undisclosed AI-generated scripts or avatars diminish the perceived authenticity of creators' stories, leaving users feeling deceived and distrustful of platforms.

“When talking about something super personal and specific it kind of feels a bit too vulnerable because generative AI isn’t being vulnerable back. With TikTok there is a shared vulnerability.”

– P7, on personified AI feeling creepy

Design Takeaways and Opportunities

One major opportunity was to create features that help users evaluate the credibility of content creators or of the information they share. Our ideas at the time included a verification system, a rating system, and transparency about the source of the content (termed “information provenance”), among other possible interventions.

We need to ensure that high-quality, factual content feels authentic, and therefore trustworthy.

Our research highlighted the importance of critical thinking in the era of mass content production. However, media literacy-based frameworks have so far failed to live up to their promise. How can we heed the warning against “assert[ing] authority over epistemology”⁵ while helping young adults resist misinformation?

We set out to build a future-vision prototype that addresses the presence of misinformation on YouTube Shorts, TikTok, or a new platform.

Ideation

Design Challenge

"How might we make thinking critically about short-form videos not feel like work?"

Ideation 1

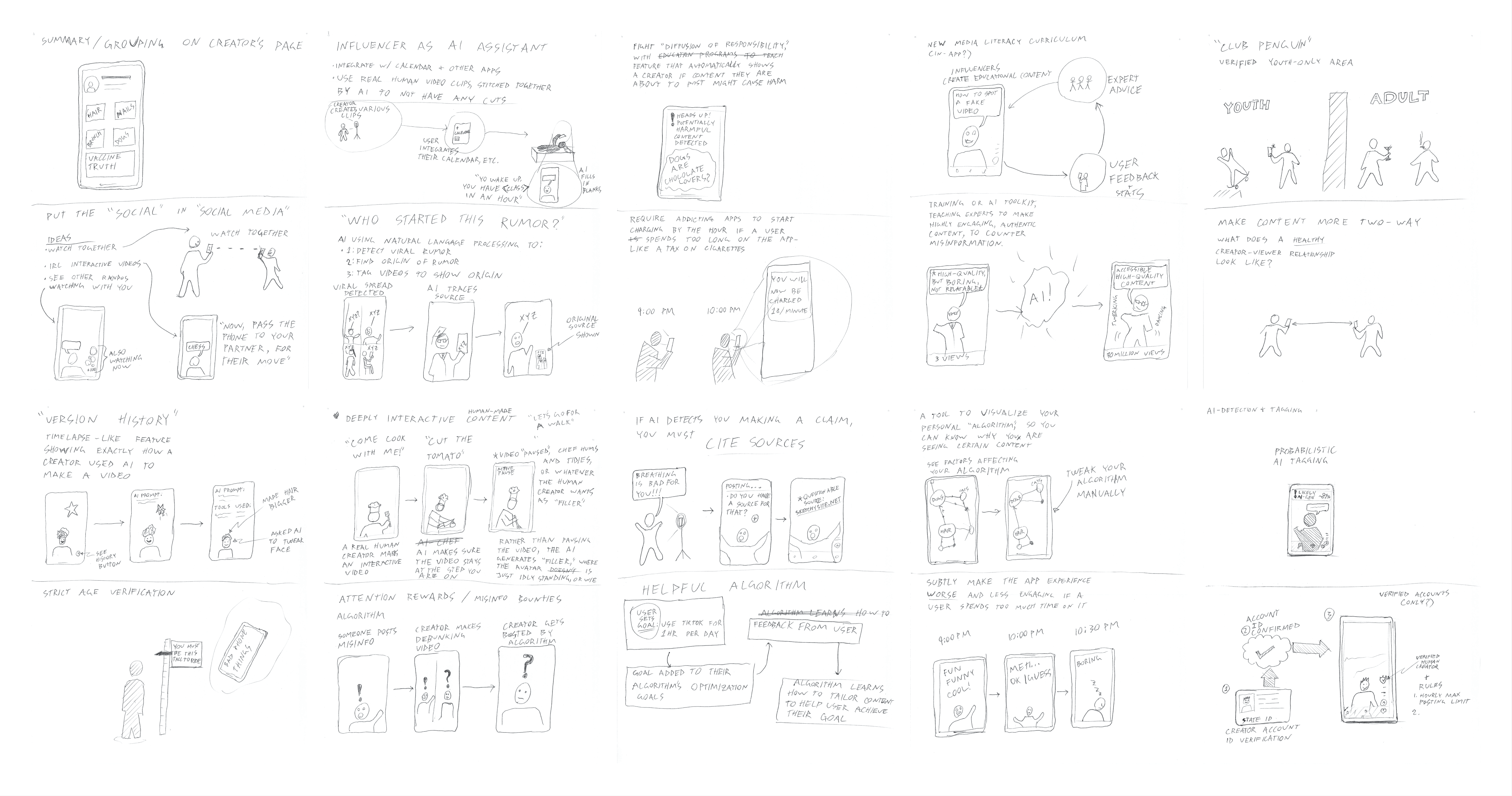

Using AI to fight misinformation

We started by individually sketching out several ideas. I used insights from our research to inform my approach.

I thought about future AI use cases and how AI might be integrated more seamlessly into people's lives. For instance, I imagined content that let you ask questions of a simulated avatar of the original content creator.

From this concept, the seeds of our final direction emerged. I thought about how AI could be used to summarize a creator's content to let you judge their output as a whole, before getting sucked in.

Initial ideas based on thoughts during research.

Convergence 1

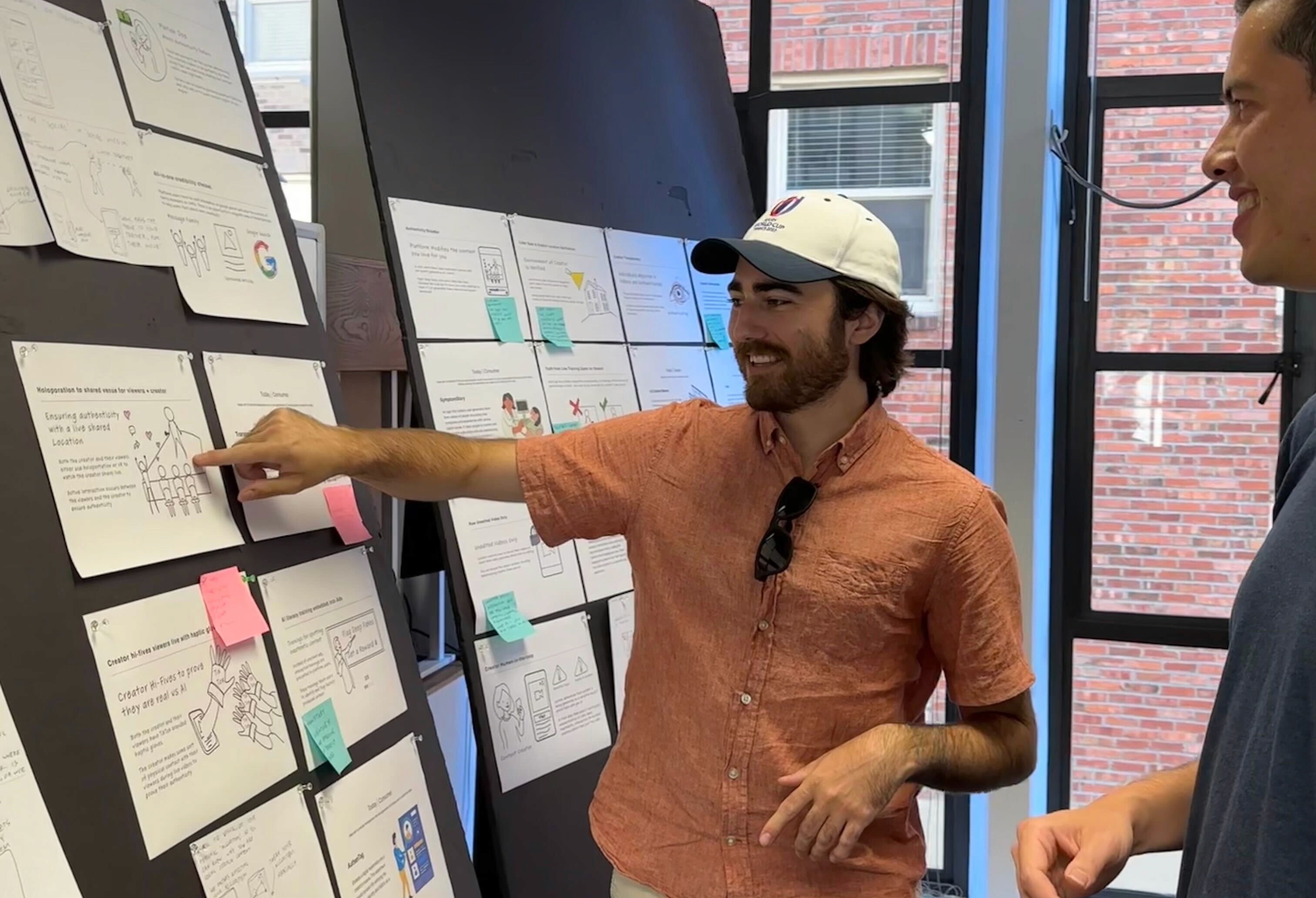

Downselection Considerations

We put all our sketches on a board and critiqued one another's ideas.

I evaluated concepts on multiple axes:

Desirability, based on our findings about users' preferences and needs.

Potential for harm to the user, to others, to the platform, and to society.

Impact on the platform, if the idea were implemented.

Feasibility, based on what we know and learned about the constraints on users, platforms, and technology.

Discussing an idea with my teammate.

Ideation 2 + Frameworks

Design Principles + Stakeholder Map + Speculative Futures

Design Principles Workshop

I led our team in a workshop to create design principles for our product. I was inspired by an article by Ethan Eismann, VP of Design at Slack, describing his approach to creating good design principles.

I adapted this method for our team's workshop, because the rigorous process would force us to be explicit with our rationale for each step.

Stages of the design principles workshop I adapted and led.

Design Principles:

Do No Harm

Platforms, content creators, AI tool makers, and designers are accountable if they host, create, enable, or share harmful content

Help the User Be Their Best Self

Adapt to satisfy people’s goals, not just their short-term preferences / instant gratification

Distinguish Fact From Opinion

Clearly highlight claims of fact vs. opinions, and reduce barriers to skeptical inquiry and fact checking

Low-Friction

AI is magically good at guiding the user through their journey, catering to their personal needs

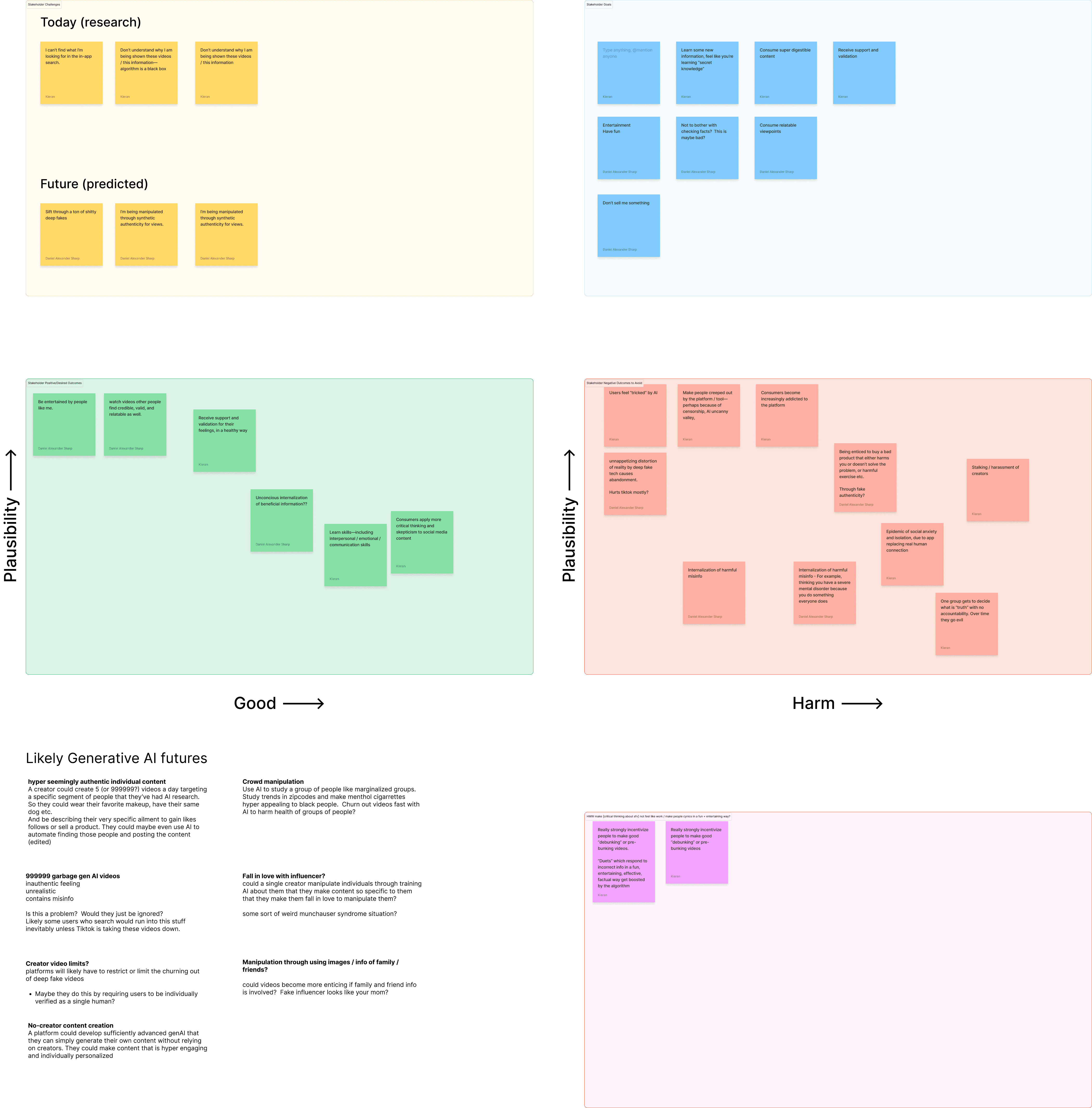

Stakeholder Map + Speculative Design Workshops

I also led our team through creating an updated stakeholder map to use for ideation and selection. We agreed to keep in mind both Content Consumer and Content Creator user archetypes.

I then led us through a speculative design exercise—we're designing for the future, but how do we know what that will look like?

Drawing on inspiration from Frog Design and Speculative Everything, I created a process for the team to imagine different futures. We placed scenarios we imagined from these futures on a chart plotting Utopic vs. Dystopic, and Likely vs. Unlikely futures.

Based on these exercises, we generated many more ideas.

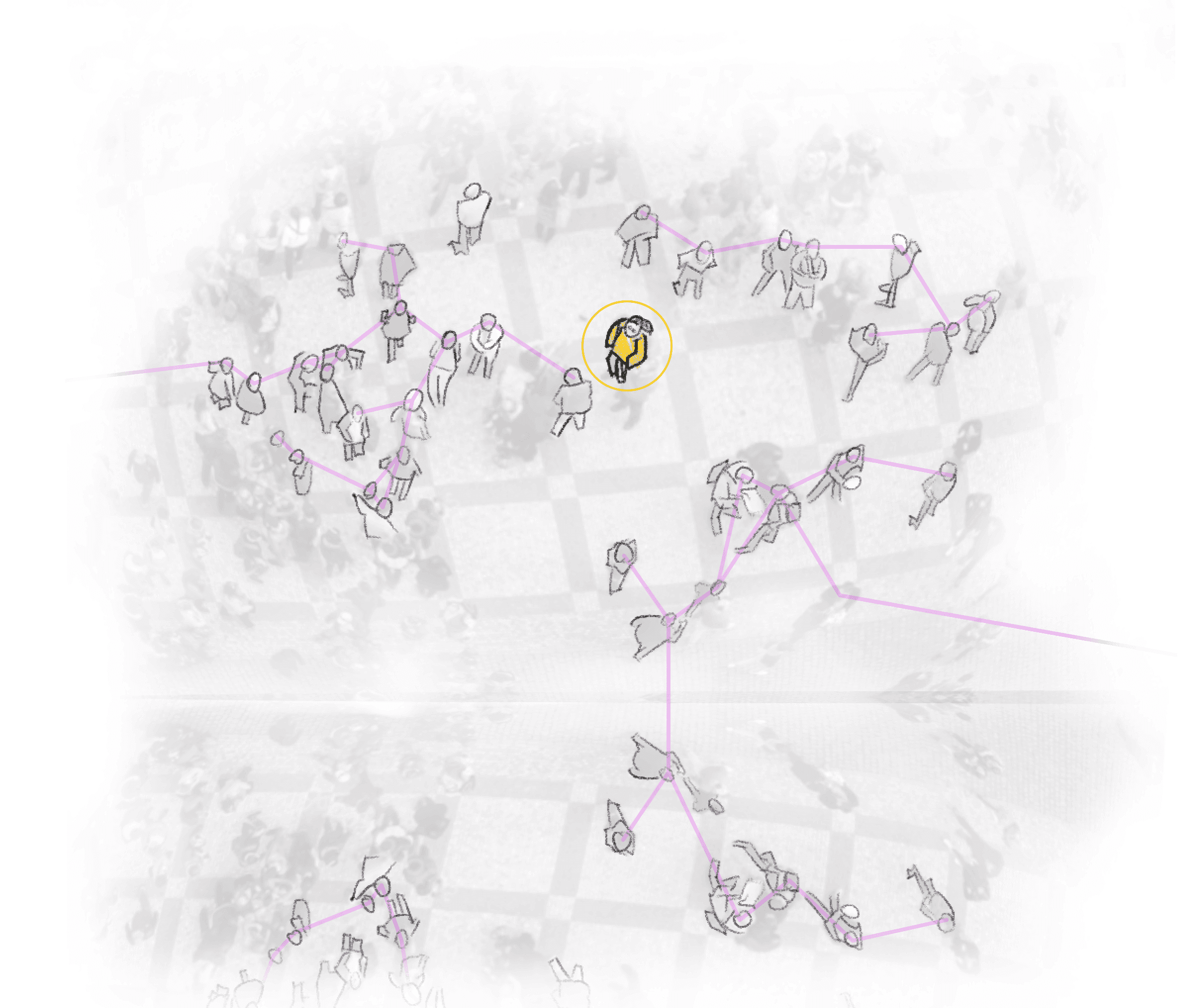

Stakeholder Map.

Speculative Futures.

Finding Direction

Plotting user needs, our desired and undesired outcomes, and futures.

An idea I had to call out when a claim is made—this 'napkin' sketch ended up becoming core to our response.

Convergence 2

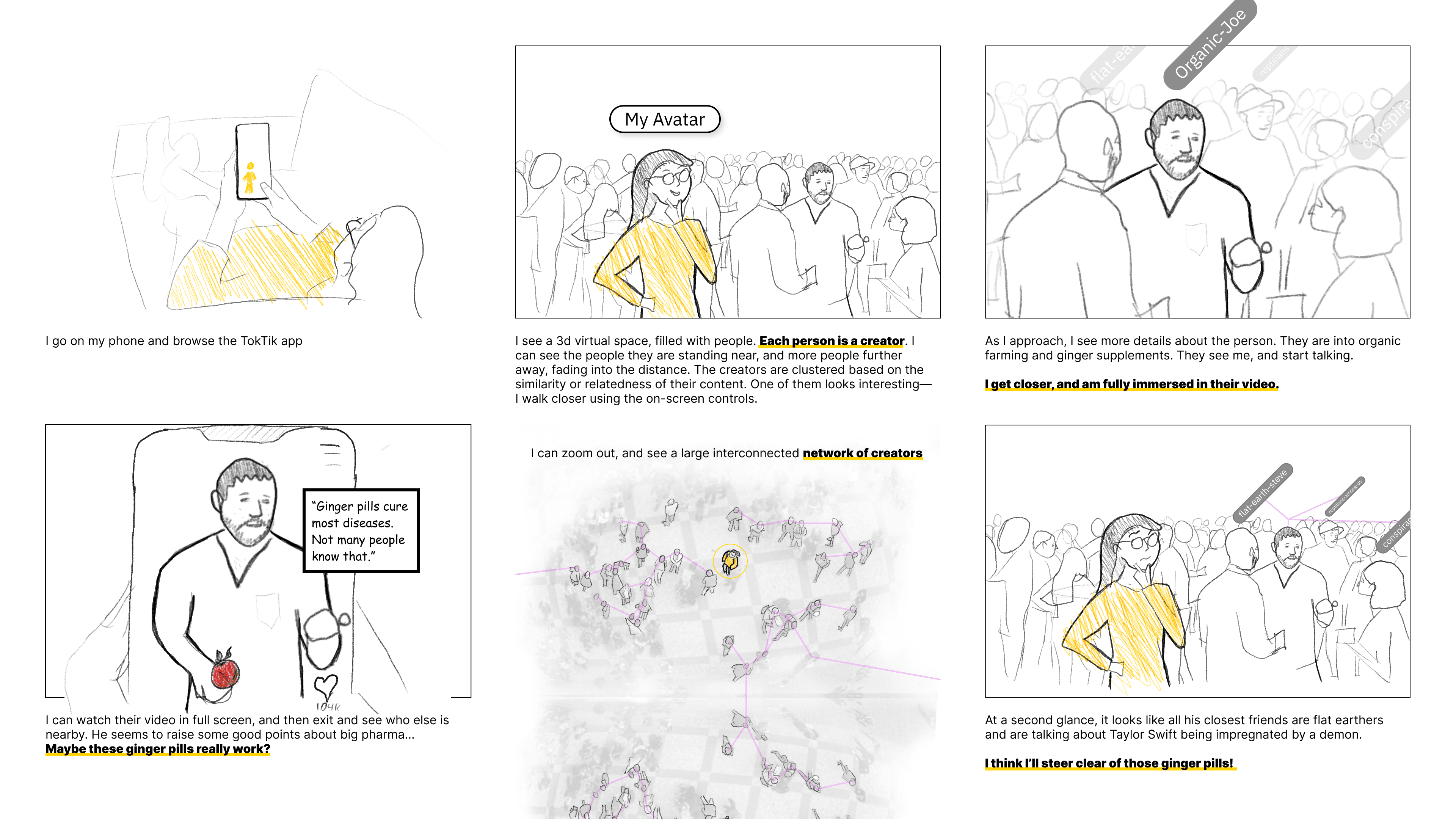

The House Party Metaphor

Q: When do we have our 'skeptical armor' up in real life? When do we evaluate a person's credibility?

A: When we're approached by a stranger. For instance, on the street, at a conference, or at a party.

Say you're at a house party, surrounded by unfamiliar people. They stand around in small groups, each chatting about their interests. Who do you approach?

Based on this concept, I realized that we could help users engage their critical thinking by showing the kind of "crowd" a content creator is associated with.

I drew this storyboard to explain.

Concept Summary: Use AI to understand a creator's views and claims, and what kinds of people they are associated* with. Present this information to the user to prompt a moment of critical judgement.

* "Associated" here doesn't just mean the people the creator has "friended," but also those that have similar views, or are otherwise thematically or semantically related, according to our proposed natural language processing and semantic analysis algorithm (functioning somewhat like current recommendation algorithms).

Final Direction

Highlight Creators' Claims + "Associations"

After discussing with my team and receiving feedback from our internal stakeholders (our Google advisors and teaching team), we decided to pursue the direction that my idea had sparked.

We had several other candidates, one of which was an AI-powered identity obfuscation tool to prevent doxxing, harassment, and stalking of users who post videos. I felt that idea was not well grounded in our primary research, because we had not interviewed enough users in their role as creators. I was ultimately successful in championing the Claims + Associations idea.

Iteration

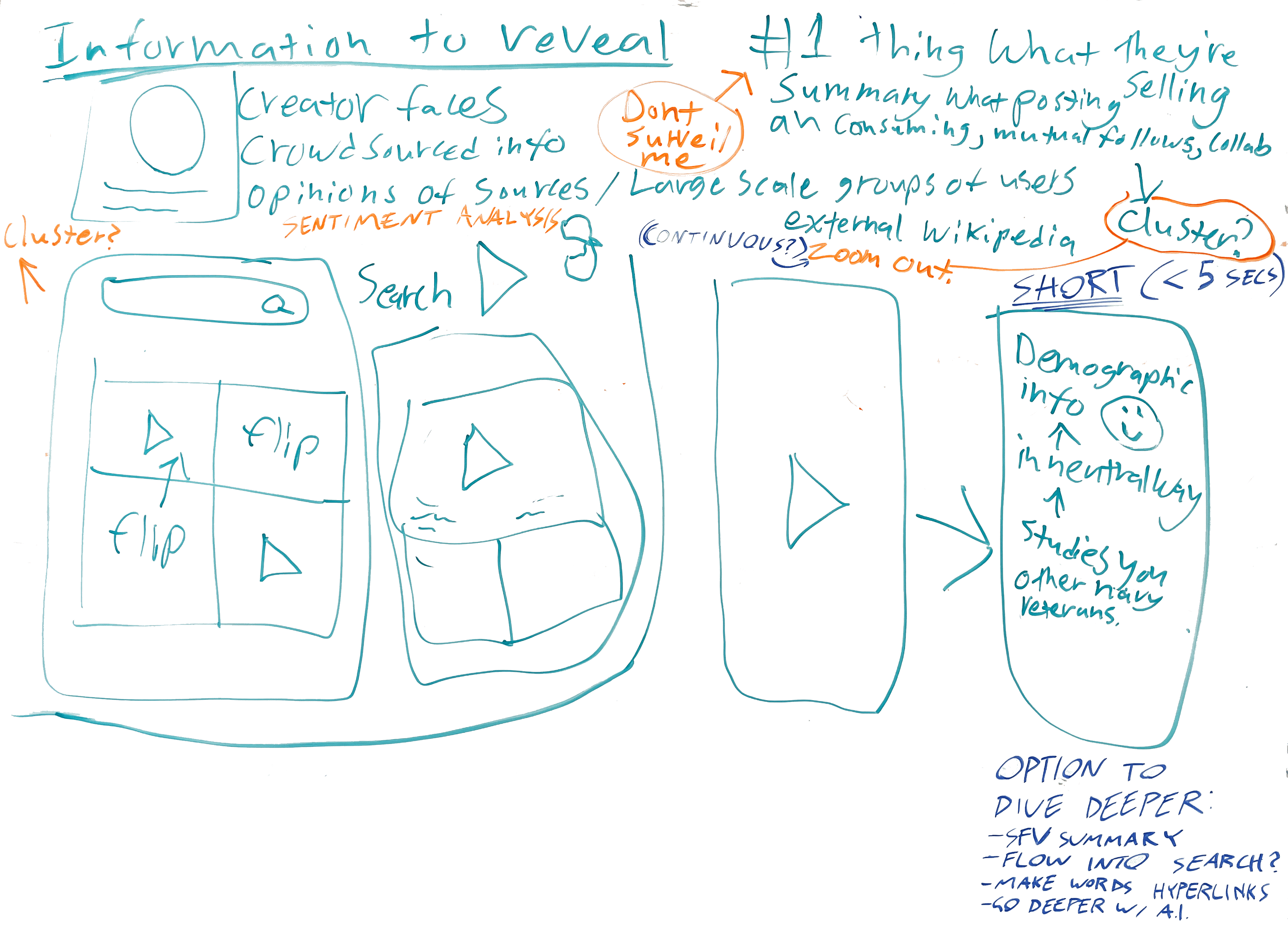

Implementation Ideation

What Will We Make?

Maxi / medi / mini versions

The idea I had pitched was a fairly "blue-sky" concept: a video-based social media platform set in a 3D space like a video game, similar to Second Life or later metaverse platforms.

Maximalist

Creator associations represented in a 3D environment, which the user navigates by steering an avatar. Difficult to integrate with existing short-form video platform designs, and perhaps better suited to AR/VR than mobile.

Medium

Creator associations displayed in 2D space, still communicating connectedness through spatial clustering. 2D-scrollable, like a maps app. This option could conceivably be integrated with existing platform designs.

Minimalist

Creator associations and claims displayed on a more traditional page with 1D scrolling, reached by "flipping over" the video. This option was the easiest to integrate with existing platform designs and had a low learning curve.

Note: I drew all of these as explanatory sketches.

We decided to pursue the minimalist, more traditional UI option.

I thought this was the best choice for a few reasons:

Feasibility

I wanted the product to be as grounded as possible; we had set out to create a product that could realistically exist in the next 1–2 years.

Usability / Learning Curve

Related to the above, I wanted to ensure that users could pick up our new product or feature with minimal training. As such, the interface design had to be familiar, not "avant-garde."

Information Design Comes First

I was conscious of our design brief: design for the information ecosystem. I wanted to make sure that the design of the information system came through as the primary designed aspect of our feature. I felt, and our internal stakeholders agreed, that employing a more "blue-sky" interface paradigm would muddy the waters and interfere with communicating our core value.

Feature Scoping

What Will It Do? How Will It Do It?

Cartoon of a traditional short-form video interface. Users scroll through a video feed by swiping up.

Self-Imposed Constraints

Features

Concept Evolution

Feature: Creator Associations*

Associations* / Connections

The "connections" feature dives into the affiliations of a creator. Users can see direct collaborators, as well as other creators who have similar views or who are thematically linked.

By understanding these connections, users can make informed decisions about their content consumption.

* "Associations" here doesn't just mean the people the creator has "friended," but also those that have similar views, or are otherwise thematically or semantically related, according to our proposed natural language processing and semantic analysis algorithm (functioning somewhat like current recommendation algorithms).
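The association algorithm was proposed rather than built. The minimal Python sketch below shows one way it could work. It assumes that some upstream language model (not specified here) has already converted each creator's content into an embedding vector. Creators are ranked by cosine similarity to the target creator, and direct collaborators are always kept. The function names, the toy vectors, and the 0.8 threshold are all hypothetical.

```python
import math


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between two equal-length vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def rank_associations(
    creator: str,
    embeddings: dict[str, list[float]],
    collaborators: set[str],
    threshold: float = 0.8,  # hypothetical cutoff for "thematically related"
) -> list[tuple[str, float]]:
    """Return associated creators, most similar first.

    Direct collaborators are always included; other creators are included
    only if their (hypothetical) content embedding is similar enough.
    """
    target = embeddings[creator]
    results = []
    for other, vec in embeddings.items():
        if other == creator:
            continue
        score = cosine_similarity(target, vec)
        if other in collaborators or score >= threshold:
            results.append((other, round(score, 3)))
    return sorted(results, key=lambda pair: pair[1], reverse=True)


# Toy 3-dimensional vectors; a real system would use learned embeddings.
embeddings = {
    "creator_a": [0.9, 0.1, 0.0],
    "creator_b": [0.8, 0.2, 0.1],  # similar topics to creator_a
    "creator_c": [0.0, 0.1, 0.9],  # unrelated topics
    "creator_d": [0.1, 0.9, 0.2],  # unrelated topics, but a direct collaborator
}
print(rank_associations("creator_a", embeddings, collaborators={"creator_d"}))
```

With these toy vectors, creator_b is returned because its topics are close to creator_a's. creator_d is returned only because it is a direct collaborator, and creator_c is left out. This sketch treats every association as equally important, whereas a real system would also need to weight associations by strength.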

Whiteboard exploration of features and information to include in Connections. Although I was not the lead designer on the Connections portion, I helped inform its design, for example by helping decide the final information hierarchy.

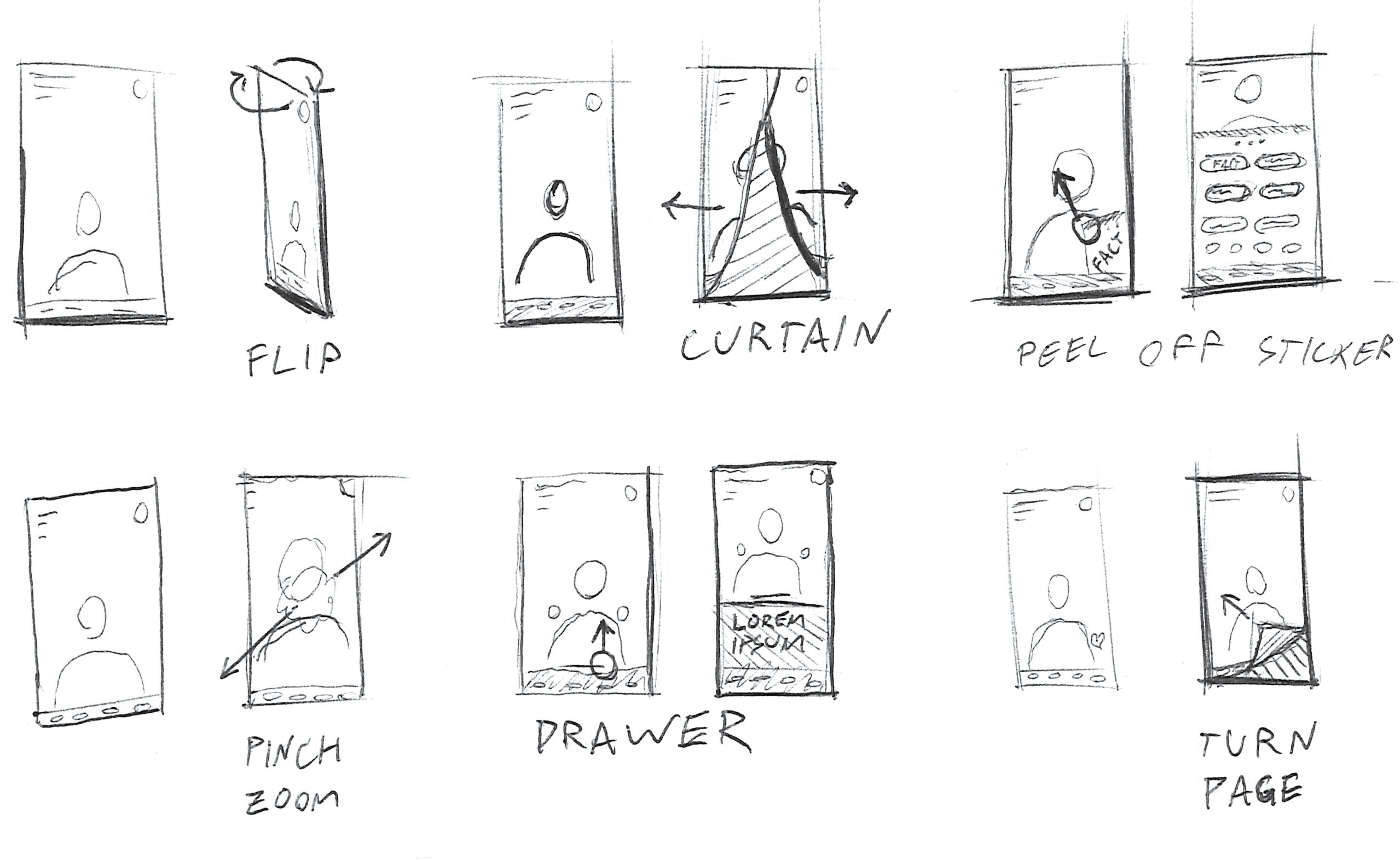

Feature: Flip Transition

Managing User Attention

I developed the "Flip" transition to serve multiple purposes.

Allows users to seek more information without leaving the platform and breaking their flow, so users should perceive less of an interruption and use the feature more.

"I would go and Google it to learn more like of the nitty gritty."

– P9, on leaving their app to verify something on Google

The Flip metaphor implies "unveiling" a deeper or hidden meaning.

The "Flip" interaction uses motion design to create a more pleasurable, novel, fun experience, making users more willing to break their flow to use the feature.

Some of my sketches, brainstorming different "reveal" interactions.

I designed the Flip to be fun and fidgety, something users would like to play with.

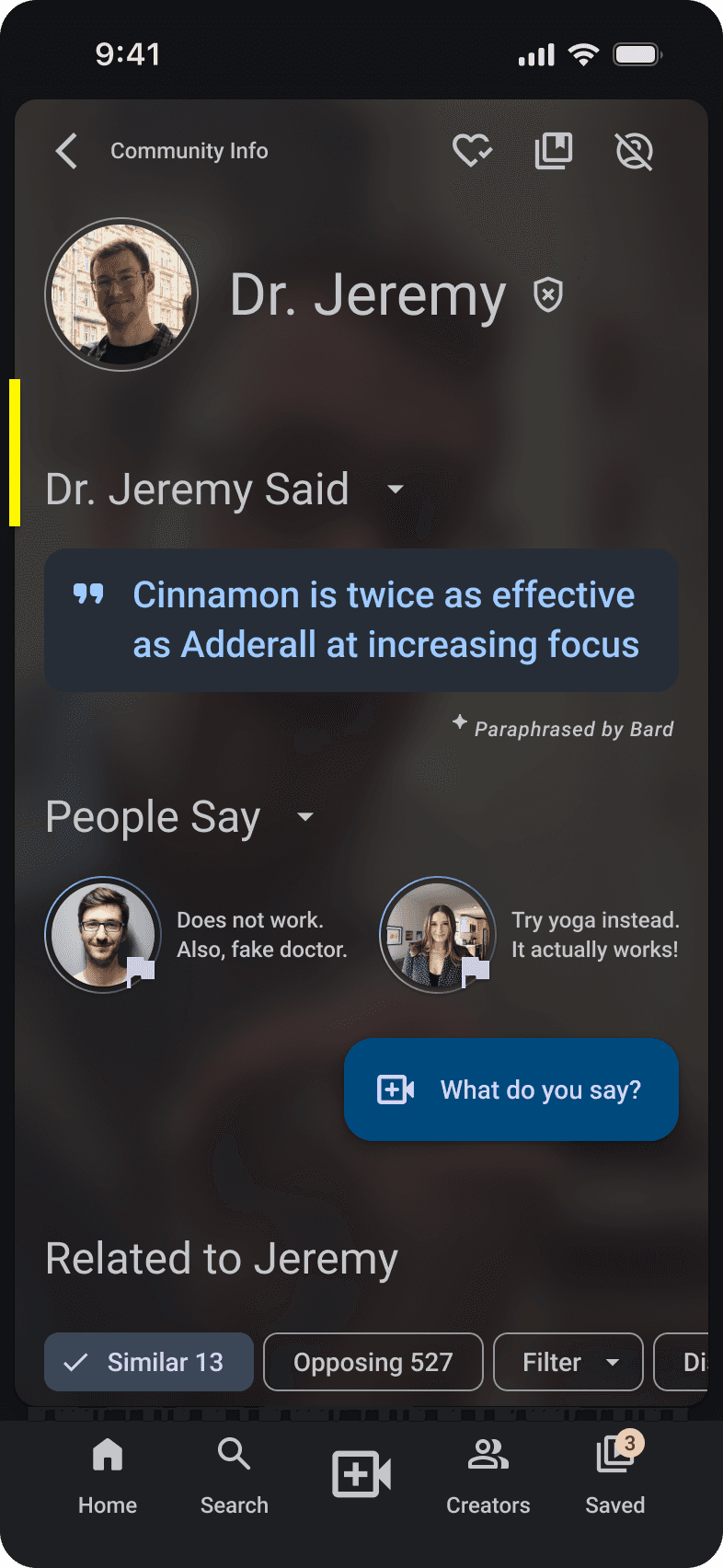

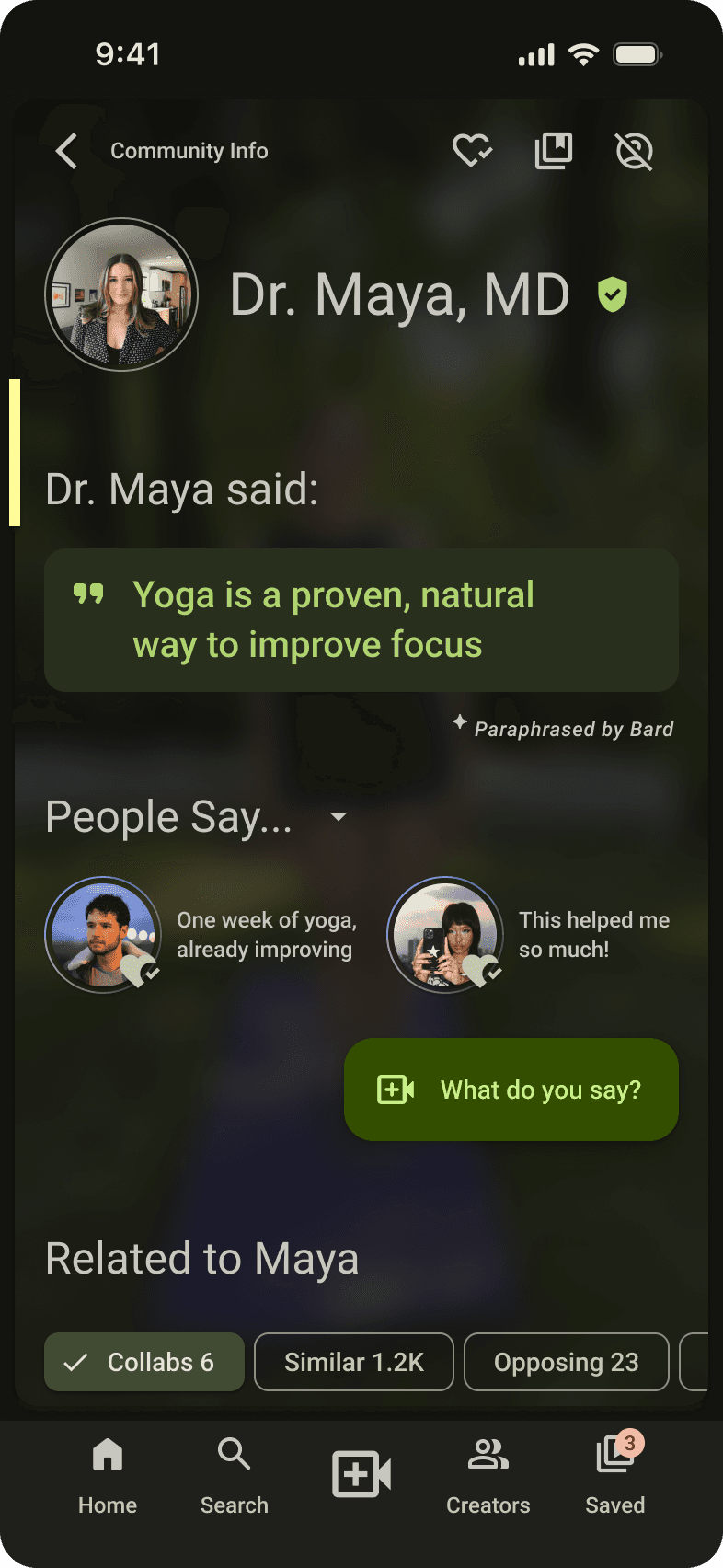

Feature: Claims Summary

Highlighting Specific Claims

I conceived and led the design of the Claims section.

The concept would highlight the most important claims in the video, notifying users that a claim has been made, when they might not otherwise notice.

One of our main research findings was that young adults often don't notice when a creator shifts from discussing personal experience or opinion to making empirical claims of fact. This feature would use natural language processing and keyphrase extraction to identify these moments, and highlight the claims being made for users.
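The NLP pipeline was never specified in detail. The minimal Python sketch below is a deliberately simple rule-based stand-in, not actual NLP or keyphrase extraction. It only illustrates the shape of the task: split a transcript into sentences, then flag the ones that read as general claims of fact rather than personal experience. The cue-phrase lists and the name `flag_claims` are hypothetical.

```python
import re

# Hypothetical cue phrases; a real system would learn these from labeled data.
EXPERIENCE_CUES = ("i feel", "i think", "for me", "in my experience", "i tried", "i noticed")
CLAIM_CUES = ("studies show", "research shows", "doctors", "causes", "cures",
              "always", "never", "everyone", "proven", "%")


def split_sentences(transcript: str) -> list[str]:
    """Naively split on sentence-ending punctuation followed by whitespace."""
    parts = re.split(r"(?<=[.!?])\s+", transcript.strip())
    return [p for p in parts if p]


def flag_claims(transcript: str) -> list[str]:
    """Return sentences that contain a claim cue and no personal-experience cue."""
    flagged = []
    for sentence in split_sentences(transcript):
        lower = sentence.lower()
        has_claim = any(cue in lower for cue in CLAIM_CUES)
        is_personal = any(cue in lower for cue in EXPERIENCE_CUES)
        if has_claim and not is_personal:
            flagged.append(sentence)
    return flagged


transcript = (
    "In my experience this serum made my skin glow. "
    "Studies show that it cures acne in two weeks. "
    "Honestly I just love the packaging."
)
for claim in flag_claims(transcript):
    print("Claim detected:", claim)
```

Substring rules like these are brittle. They miss paraphrased claims and misfire on words like "whenever" (which contains "never"). That brittleness is why the feature as described relies on natural language processing rather than fixed phrase lists.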

“I wouldn't say that it is or is not trustworthy. I feel like a lot of this stuff is like people talking about their own experiences... it's kind of like the nature of TikTok as a medium.” – P2, on learning new “insider” information on Instagram Reels

An early prototype demonstrating the generative text effect used to communicate AI involvement. I realized this paragraph-length claims summary was far too long to read, and replaced it with a shorter one.

Outcome

Concept Video

This short video explains the concept, following an example user journey.

Final Design

Flip Transition

The "Flip" interaction uses motion design to create a more pleasurable, rewarding, fun experience, making users more willing to break their flow to use the feature.

Demo video showing the Flip Tab Indicator and the Flip motion.

Final Design

Claims Section

Highlights the most important claims in the video, notifying users that a claim has been made, when they might not otherwise notice.

Encourages young adults to reflect on content and avoid accepting misleading information. Based on my finding that young adults may not notice when opinions become claims of fact.

The "People Say" subsection presents videos from users with similar or contrasting viewpoints to the claim in question.

I added this subsection based on the finding that young adults often rely on peer platform users to determine truth.

Therefore, I believed young adults would react more favorably to peer platform users' viewpoints than to direct fact-checking by the platform.

"People Say" can show users who have responded directly to the claim video, or relevant viewpoints from across the platform, from users who may have never seen this particular video.

Rationale

The Claims feature aims to sidestep the "backfire effect" by highlighting claims in a non-judgmental way and by showing the user responses from other platform users, not from the platform.

We found in our secondary and primary research that young adults "crowdsource credibility,"³ relying on peer networks (including comment sections) to engage in collective sense-making when evaluating information.

Further, our literature review found evidence that directly contradicting misinformation (that is, "fact-checking" as it is commonly employed and understood) is usually ineffective or even harmful. One study found that a majority of people perceive direct fact-checking by social media platforms as "judgmental, paternalistic, and against the platform ethos."⁴

Final Design

Dynamic Color System

I implemented a dynamic color system for FlipSide to maintain visual continuity with the source video.

The FlipSide background is a blurred, value-shifted mirror image of the video that was playing.

The UI elements on the page use key colors extracted from the video.

These techniques combine to maintain context for users, preserving the metaphor that the video is a card that has been flipped over.
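Below is a minimal Python sketch of the color techniques described above. It is not how the prototype did it. It assumes a video frame is available as a 2D grid of (R, G, B) tuples. The sketch mirrors the frame horizontally, applies a 3x3 box blur, and darkens the result (a simple "value shift"). It then picks a key UI color by coarse color quantization: it buckets each channel and takes the most common bucket. The function names, the bucket size, and the darkening factor are hypothetical.

```python
from collections import Counter

Pixel = tuple[int, int, int]
Frame = list[list[Pixel]]


def mirror(frame: Frame) -> Frame:
    """Flip the frame horizontally, like the back of a turned-over card."""
    return [list(reversed(row)) for row in frame]


def box_blur(frame: Frame) -> Frame:
    """Average each pixel with its in-bounds 3x3 neighborhood (integer division)."""
    h, w = len(frame), len(frame[0])
    out: Frame = []
    for y in range(h):
        row = []
        for x in range(w):
            neighbors = [frame[ny][nx]
                         for ny in range(max(0, y - 1), min(h, y + 2))
                         for nx in range(max(0, x - 1), min(w, x + 2))]
            n = len(neighbors)
            row.append(tuple(sum(p[c] for p in neighbors) // n for c in range(3)))
        out.append(row)
    return out


def value_shift(frame: Frame, factor: float = 0.4) -> Frame:
    """Darken every pixel so overlaid UI stays legible (hypothetical factor)."""
    return [[tuple(int(c * factor) for c in p) for p in row] for row in frame]


def key_color(frame: Frame, bucket: int = 32) -> Pixel:
    """Return the center of the most common coarse color bucket."""
    counts = Counter(
        tuple((c // bucket) * bucket + bucket // 2 for c in p)
        for row in frame for p in row
    )
    return counts.most_common(1)[0][0]


def flip_side_background(frame: Frame) -> Frame:
    """Blurred, darkened mirror image of the frame that was playing."""
    return value_shift(box_blur(mirror(frame)))


# A tiny 2x2 "frame" that is mostly red with one blue pixel.
frame = [[(200, 40, 40), (210, 50, 45)], [(20, 20, 200), (205, 45, 42)]]
print("Key UI color:", key_color(frame))
print("Background:", flip_side_background(frame))
```

Quantizing before counting keeps near-identical shades (such as the three reds above) in one bucket. As a result, the chosen key color reflects the video's dominant hue rather than a single exact pixel value.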

I hypothesize that users will be more willing to "Flip" away from their video feed if they can be assured that they can easily get back to their video at any time.

The dynamic color system extracts UI colors from the source video, assuring the user that they can always return to watching.

Final Design

Connections Section

While my teammate led the design for the Connections section, I helped optimize the information hierarchy.

We designed this feature in response to the finding that young adults often trust short-form video (SFV) based on the perceived authenticity of the creator, a finding I helped derive from our research.

I helped determine the best information hierarchy, particularly around simplifying the information on the first screen down to the bare essentials.

We thought about putting a subsection at the top displaying a creator's product sponsorships, but I successfully advocated for giving this information less prominence, and instead putting the related creators at the top.

I felt related creators were more useful than sponsorships for making a snap judgement about a creator, because interviews I conducted indicated that young adults often don't distrust creators based on the products they endorse.

The connections section allows users to judge a creator by the "digital company" they keep.

Evaluation

How do we measure success?

Retention

The share of users who continue to use FlipSide after their first session. Do they prefer FlipSide or the standard SFV experience?

Flipping & Session Duration

Are users who flip more engaged with the platform? Do users spend more time on FlipSide or in the main video feed? Which aspects of our platform are the most engaging?

What attributes do flipped videos have in common? We might survey some users, asking, “Why did you flip this video?”
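The retention and flipping metrics are simple to compute from usage logs. Below is a minimal Python sketch that assumes a hypothetical log of per-session records with a user ID, a session number, and a flip count. The record shape and both function names are illustrative assumptions. Here, "retained" means a user flipped in at least two distinct sessions.

```python
from dataclasses import dataclass


@dataclass
class Session:
    user_id: str
    session_number: int  # 1 = the user's first session
    flips: int           # times the user flipped a video in this session


def retention_rate(sessions: list[Session]) -> float:
    """Share of flipping users who flipped in at least two distinct sessions."""
    flip_sessions: dict[str, set[int]] = {}
    for s in sessions:
        if s.flips > 0:
            flip_sessions.setdefault(s.user_id, set()).add(s.session_number)
    if not flip_sessions:
        return 0.0
    retained = sum(1 for nums in flip_sessions.values() if len(nums) > 1)
    return retained / len(flip_sessions)


def mean_flips_per_session(sessions: list[Session]) -> float:
    """Average number of flips across all logged sessions."""
    if not sessions:
        return 0.0
    return sum(s.flips for s in sessions) / len(sessions)


log = [
    Session("u1", 1, 3), Session("u1", 2, 1),  # keeps flipping: retained
    Session("u2", 1, 2), Session("u2", 2, 0),  # stops flipping: not retained
    Session("u3", 1, 0), Session("u3", 2, 0),  # never flips: excluded
]
print(f"Retention: {retention_rate(log):.0%}")
print(f"Mean flips per session: {mean_flips_per_session(log):.2f}")
```

Users who never flip are excluded from the retention denominator, so this metric describes whether people who try FlipSide come back to it. The questions above about preference and engagement would still need the survey and comparison data described in this section.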

Flagging, Liking, and Saving

Monitor how flagging is used. Does flagging guide users to a more desirable space over time? Are we presenting users with desirable content? Do our AI-generated summaries seem accurate and trustworthy?

A user blocks content from their feed after distrusting the creator.

Reflection

What I Learned

How to advocate effectively for my ideas, using metaphors, sketching, and other aids to communicate with and persuade diverse stakeholders

Stronger motion design skills

How to create functional prototypes

A deeper understanding of mobile design principles

What I'd Do Differently

We spoke to some users who were also creators, but I wish we had had time to interview creators in depth on their experiences, and their feelings on our response.

On occasion I felt our group was too reactive to feedback, and I learned the value of acting as a “filter” for feedback.

Our response relies heavily on proper tuning of our algorithms for extracting claims from videos, pulling responses to those claims, and displaying associated creators. In industry, I would have worked with engineers to have a better grasp of the technical challenges involved.

Opportunity Areas

More Evaluative Testing

We did not have time to perform extended, formal evaluative testing. We performed some rapid tests with people we knew, but there is a lot of data I would like to collect from testing with real users.

For instance, I'd like to test the Flip against putting the claims / connections information "inline" with the video feed, such that it's reached with the same vertical swipe. Which option results in greater engagement with the information on the page?

Explore GenAI Tagging

I had initially considered a badge or other means of communicating that the platform detected the use of generative AI in a video. I decided not to pursue this angle in order to prioritize what I felt were the more novel and valuable aspects of the project.

However, I would like to explore how best to communicate to users that generative AI has been used (if the platform is able to detect it).

Interview Creators As Creators

We talked to users who also create content, but we did not interview them specifically as creators.

I would like to ask creators explicitly how our response would affect them, and how they feel about the concept.

Would 3D Work?

My initial concept for an immersive 3D social media interface received a lot of positive feedback. Although we decided to go in a different direction, I would be excited to pursue a more "blue sky" interface paradigm, perhaps using VR/AR.

Citations

1. Radesky, J., Weeks, H. M., Schaller, A., Robb, M., Mann, S., & Lenhart, A. (2023). Constant companion: A week in the life of a young person's smartphone use. San Francisco, CA: Common Sense.

2. Perez, S. (2023, February 7). TikTok is crushing YouTube in annual study of kids’ and teens’ app usage. TechCrunch. https://techcrunch.com/2023/02/07/tiktok-is-crushing-youtube-in-annual-study-of-kids-and-teens-app-usage/

3. Hassoun, A., Beacock, I., Consolvo, S., Goldberg, B., Kelley, P. G., & Russell, D. M. (2023). Practicing information sensibility: How Gen Z engages with online information. Proceedings of the 2023 CHI Conference on Human Factors in Computing Systems. https://doi.org/10.1145/3544548.3581328

4. Saltz, E., Leibowicz, C. R., & Wardle, C. (2021). Encounters with visual misinformation and labels across platforms. Extended Abstracts of the 2021 CHI Conference on Human Factors in Computing Systems. https://doi.org/10.1145/3411763.3451807

5. boyd, d. (2018, March 9). You think you want media literacy… do you? Data & Society: Points. https://medium.com/datasociety-points/you-think-you-want-media-literacy-do-you-7cad6af18ec2